CG -- The Method of Steepest Descent

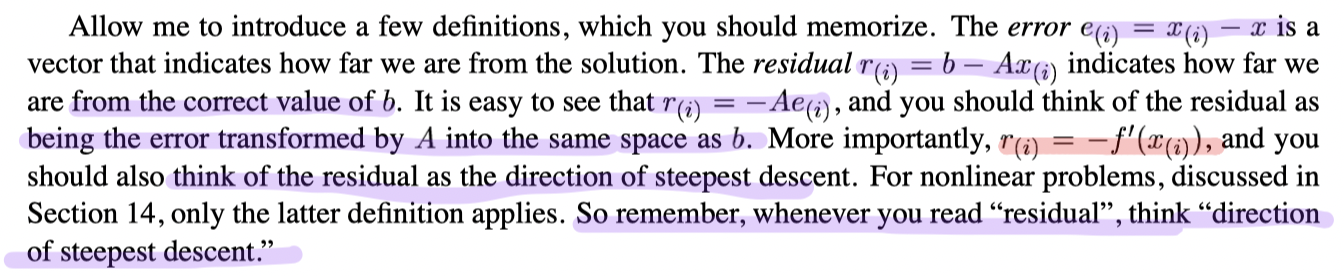

Definitions

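- Error: $e_{(i)} = x_{(i)} - x$ is how far the iterate $x_{(i)}$ is from the solution $x$ of $Ax = b$.
- Residual: $r_{(i)} = b - A x_{(i)} = -A e_{(i)} = -f'(x_{(i)})$ is how far $A x_{(i)}$ is from $b$.

Whenever you read "residual", think "direction of steepest descent".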

Procedure

- Starting from an initial point

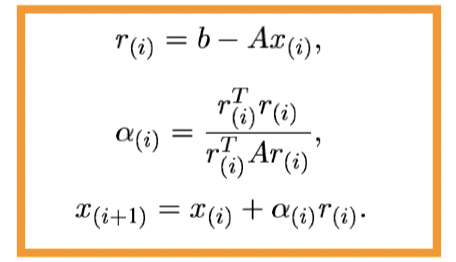

- compute

, which is the direction - Select next point

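How to decide $\alpha$

Choose the $\alpha$ that minimizes $f$ along the search line (an exact line search). At that minimum the directional derivative vanishes:

$$
\frac{d}{d\alpha} f(x_{(1)}) = f'(x_{(1)})^T \frac{d x_{(1)}}{d\alpha} = -r_{(1)}^T r_{(0)} = 0,
$$

so the new residual is orthogonal to the old search direction. Substituting $r_{(1)} = b - A(x_{(0)} + \alpha r_{(0)}) = r_{(0)} - \alpha A r_{(0)}$ gives

$$
(r_{(0)} - \alpha A r_{(0)})^T r_{(0)} = 0
\quad\Longrightarrow\quad
\alpha = \frac{r_{(0)}^T r_{(0)}}{r_{(0)}^T A r_{(0)}}.
$$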
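Below is a minimal NumPy sketch of the exact line search. It assumes a small symmetric positive-definite example; the values of `A`, `b` and `x0` are illustrative and do not come from these notes. The code takes the step size $\alpha = r_{(0)}^T r_{(0)} / r_{(0)}^T A r_{(0)}$ and checks that the new residual is orthogonal to the old search direction.

```python
import numpy as np

# Illustrative SPD system (assumed values); any symmetric positive-definite A works.
A = np.array([[3.0, 2.0], [2.0, 6.0]])
b = np.array([2.0, -8.0])
x0 = np.array([-2.0, -2.0])

r0 = b - A @ x0                      # direction of steepest descent at x0
alpha = (r0 @ r0) / (r0 @ A @ r0)    # exact line-search step size
x1 = x0 + alpha * r0
r1 = b - A @ x1                      # residual at the new point

# At the line minimum, the new residual is orthogonal to the old direction.
print(r1 @ r0)                       # zero up to rounding error
```

Perturbing `alpha` in either direction raises $f$, which confirms this step really is the minimum along the line.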

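Final procedure

$$
\begin{aligned}
r_{(i)} &= b - A x_{(i)}, \\
\alpha_{(i)} &= \frac{r_{(i)}^T r_{(i)}}{r_{(i)}^T A r_{(i)}}, \\
x_{(i+1)} &= x_{(i)} + \alpha_{(i)} r_{(i)}.
\end{aligned}
$$

Premultiplying the last line by $-A$ and adding $b$ gives $r_{(i+1)} = r_{(i)} - \alpha_{(i)} A r_{(i)}$. With this recurrence, each iteration needs only one matrix-vector product, $A r_{(i)}$.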
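The final procedure can be sketched in NumPy as follows. The function name `steepest_descent`, the stopping rule $\|r\| \le \text{tol}\,\|b\|$ and the example system are assumptions made for illustration. The sketch updates the residual with the recurrence $r_{(i+1)} = r_{(i)} - \alpha_{(i)} A r_{(i)}$.

```python
import numpy as np

def steepest_descent(A, b, x0, tol=1e-10, max_iter=10_000):
    """Hypothetical helper: steepest descent with exact line search for SPD A.

    Stops when ||r|| <= tol * ||b||; returns (x, number_of_iterations).
    """
    x = np.asarray(x0, dtype=float).copy()
    r = b - A @ x                        # r_(0) = b - A x_(0)
    b_norm = np.linalg.norm(b)
    for i in range(max_iter):
        if np.linalg.norm(r) <= tol * b_norm:
            return x, i
        Ar = A @ r                       # the one matrix-vector product per step
        alpha = (r @ r) / (r @ Ar)       # alpha_(i) = r^T r / r^T A r
        x = x + alpha * r                # x_(i+1) = x_(i) + alpha_(i) r_(i)
        r = r - alpha * Ar               # r_(i+1) = r_(i) - alpha_(i) A r_(i)
    return x, max_iter


A = np.array([[3.0, 2.0], [2.0, 6.0]])   # illustrative SPD matrix
b = np.array([2.0, -8.0])
x, iters = steepest_descent(A, b, [-2.0, -2.0])
print(x, iters)                          # x is close to [2, -2]
```

The residual recurrence saves a matrix-vector product but lets rounding error accumulate. A common remedy is to recompute `r = b - A @ x` every so often.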

Convergence

CG -- Eigenvalues (Eigenvectors) and Convergence

Several special cases

All cases below use the final procedure of steepest descent.

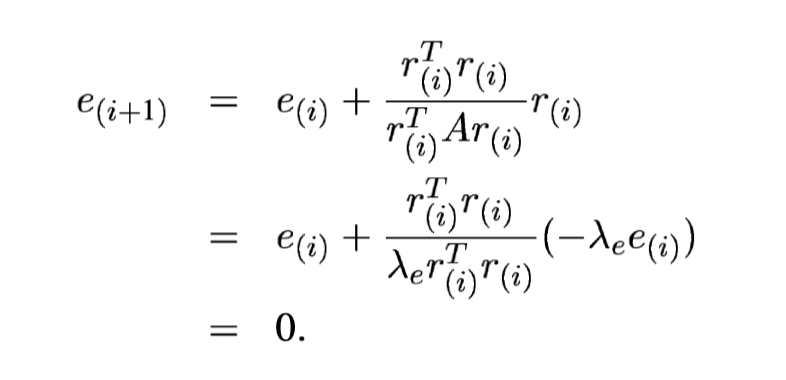

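Special case 1

If $e_{(i)}$ is an eigenvector of $A$ with eigenvalue $\lambda_e$, then the residual $r_{(i)} = -A e_{(i)} = -\lambda_e e_{(i)}$ is also an eigenvector, and

$$
e_{(i+1)} = e_{(i)} + \frac{r_{(i)}^T r_{(i)}}{r_{(i)}^T A r_{(i)}}\, r_{(i)}
= e_{(i)} + \frac{r_{(i)}^T r_{(i)}}{\lambda_e\, r_{(i)}^T r_{(i)}} \left(-\lambda_e e_{(i)}\right) = 0.
$$

It takes only one step to converge to the exact solution. The residual points straight at the minimum, and $\alpha_{(i)} = \lambda_e^{-1}$.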
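The following NumPy check illustrates Special case 1 on an illustrative matrix (an assumption, not from the notes). The starting point is chosen so that $e_{(0)}$ is a multiple of one eigenvector. One step then lands exactly on the solution, with $\alpha = 1/\lambda_e$.

```python
import numpy as np

A = np.array([[3.0, 2.0], [2.0, 6.0]])   # illustrative SPD matrix
x_true = np.array([2.0, -2.0])
b = A @ x_true

lam, V = np.linalg.eigh(A)               # ascending eigenvalues, orthonormal eigenvectors
v = V[:, 1]                              # eigenvector for the largest eigenvalue
x0 = x_true + 5.0 * v                    # so e_(0) = 5 v is an eigenvector

r0 = b - A @ x0                          # r_(0) = -A e_(0) = -lambda_e e_(0)
alpha = (r0 @ r0) / (r0 @ A @ r0)        # should equal 1 / lambda_e
x1 = x0 + alpha * r0
print(alpha, 1.0 / lam[1])
print(np.linalg.norm(x1 - x_true))       # zero up to rounding: one-step convergence
```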

General Formula

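If $A$ is symmetric, it has $n$ orthonormal eigenvectors $v_1, \dots, v_n$ with eigenvalues $\lambda_1, \dots, \lambda_n$, and we write the error as $e_{(i)} = \sum_{j=1}^{n} \xi_j v_j$.

Then

$$
\begin{aligned}
r_{(i)} &= -A e_{(i)} = -\sum_j \xi_j \lambda_j v_j, \\
\|e_{(i)}\|^2 &= e_{(i)}^T e_{(i)} = \sum_j \xi_j^2, \\
e_{(i)}^T A e_{(i)} &= \sum_j \xi_j^2 \lambda_j, \\
r_{(i)}^T r_{(i)} &= \sum_j \xi_j^2 \lambda_j^2, \\
r_{(i)}^T A r_{(i)} &= \sum_j \xi_j^2 \lambda_j^3,
\end{aligned}
$$

and one step of steepest descent becomes

$$
e_{(i+1)} = e_{(i)} + \frac{\sum_j \xi_j^2 \lambda_j^2}{\sum_j \xi_j^2 \lambda_j^3}\, r_{(i)}.
$$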
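Here is a NumPy sanity check of the general formula. It builds a random SPD matrix and a random error, both assumed purely for illustration. It then confirms that the step size written with eigen-components, $\sum_j \xi_j^2\lambda_j^2 / \sum_j \xi_j^2\lambda_j^3$, matches the direct $r^T r / r^T A r$.

```python
import numpy as np

rng = np.random.default_rng(0)
M = rng.standard_normal((4, 4))
A = M @ M.T + 4 * np.eye(4)              # random SPD matrix (illustrative)
lam, V = np.linalg.eigh(A)               # A = V diag(lam) V^T

e = rng.standard_normal(4)               # arbitrary error e_(i)
xi = V.T @ e                             # components: e = sum_j xi_j v_j
r = -A @ e                               # r_(i) = -A e_(i)

# Direct steepest-descent step applied to the error.
e_next_direct = e + (r @ r) / (r @ A @ r) * r
# Same step with the step size written in eigen-components.
alpha_eig = np.sum(xi**2 * lam**2) / np.sum(xi**2 * lam**3)
e_next_eig = e + alpha_eig * r
print(np.allclose(e_next_direct, e_next_eig))
```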

Special case 2

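If $e_{(i)}$ has only one nonzero eigenvector component $\xi_k$, the general formula reduces to Special case 1:

$$
e_{(i+1)} = \xi_k v_k + \frac{\xi_k^2 \lambda_k^2}{\xi_k^2 \lambda_k^3}\left(-\xi_k \lambda_k v_k\right) = 0.
$$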

Special case 3

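All the eigenvectors have a common eigenvalue $\lambda$, so $A = \lambda I$ and every vector is an eigenvector. Then $r_{(i)} = -\lambda e_{(i)}$ for any $e_{(i)}$, and

$$
e_{(i+1)} = e_{(i)} + \frac{\lambda^2 \sum_j \xi_j^2}{\lambda^3 \sum_j \xi_j^2}\left(-\lambda e_{(i)}\right) = 0,
$$

so steepest descent again converges in one step, from any starting point.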
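The simplest instance of Special case 3 is $A = \lambda I$. The NumPy sketch below uses illustrative values for $\lambda$, the solution and the start. From an arbitrary starting point, the step size is $1/\lambda$ and one step reaches the solution.

```python
import numpy as np

lam = 3.0
A = lam * np.eye(3)                      # every vector is an eigenvector with eigenvalue lam
x_true = np.array([1.0, -2.0, 0.5])
b = A @ x_true
x0 = np.array([10.0, 4.0, -7.0])         # arbitrary starting point

r0 = b - A @ x0                          # r_(0) = -lam * e_(0)
alpha = (r0 @ r0) / (r0 @ A @ r0)        # equals 1 / lam regardless of x0
x1 = x0 + alpha * r0
print(alpha, np.linalg.norm(x1 - x_true))
```

Geometrically, the level sets of $f$ are spheres, so the residual always points at the center.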

General Convergency

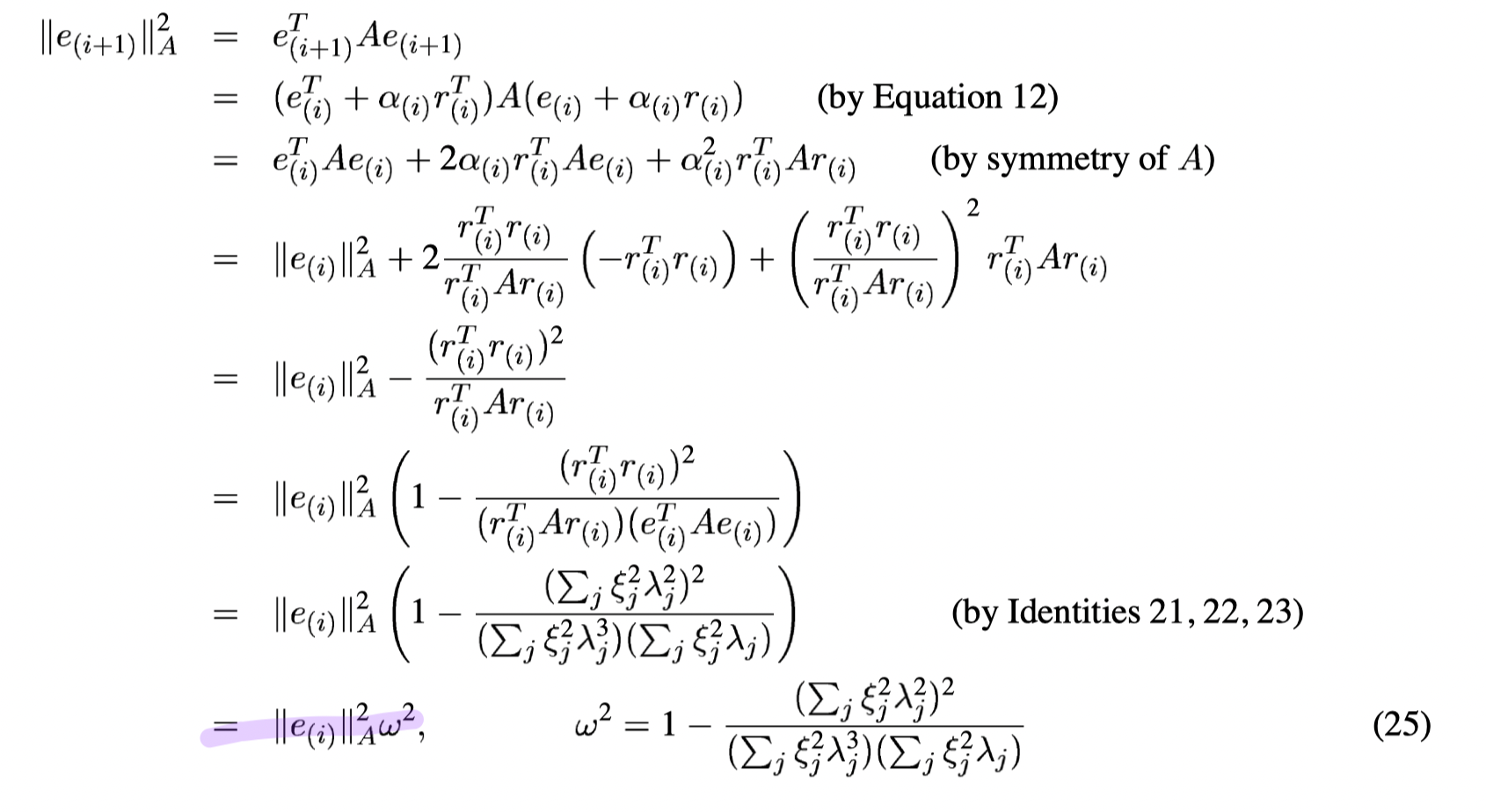

We start from the general formula: each step maps $e_{(i)}$ to $e_{(i+1)} = e_{(i)} + \alpha_{(i)} r_{(i)}$.

To measure the error, we define the energy norm

$$
\|e\|_A = \left(e^T A e\right)^{1/2}.
$$

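Transferring the minimization from $f$ to $\|e\|_A$

Recall that for an arbitrary point $p$ and the solution $x$ of $Ax = b$ (from the Conjugate Gradient note),

$$
f(p) = f(x) + \frac{1}{2}(p - x)^T A (p - x).
$$

That is, with $p = x_{(i)}$ and $e_{(i)} = x_{(i)} - x$,

$$
f(x_{(i)}) = f(x) + \frac{1}{2} e_{(i)}^T A e_{(i)} = f(x) + \frac{1}{2}\|e_{(i)}\|_A^2.
$$

Thus, since $f(x)$ is a constant, minimizing $\|e_{(i)}\|_A$ is equivalent to minimizing $f(x_{(i)})$.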
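A small NumPy check of the identity $f(p) - f(x) = \tfrac12\|p - x\|_A^2$ follows. It assumes the quadratic form $f(p) = \tfrac12 p^T A p - b^T p$, with the constant term dropped since it cancels. The matrix and test point are illustrative, and `f` and `energy_norm` are hypothetical helper names.

```python
import numpy as np

A = np.array([[3.0, 2.0], [2.0, 6.0]])    # illustrative SPD matrix
b = np.array([2.0, -8.0])
x = np.linalg.solve(A, b)                 # minimizer of f, here [2, -2]

def f(p):
    """Quadratic form f(p) = 1/2 p^T A p - b^T p (constant term omitted)."""
    return 0.5 * p @ A @ p - b @ p

def energy_norm(e):
    """Energy norm ||e||_A = sqrt(e^T A e)."""
    return np.sqrt(e @ A @ e)

p = np.array([-1.0, 3.0])                 # arbitrary point
e = p - x
print(f(p) - f(x), 0.5 * energy_norm(e) ** 2)   # both approximately 58.5
```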

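Recall $e_{(i+1)} = e_{(i)} + \alpha_{(i)} r_{(i)}$, $\alpha_{(i)} = \dfrac{r_{(i)}^T r_{(i)}}{r_{(i)}^T A r_{(i)}}$ and $A e_{(i)} = -r_{(i)}$.

Express $\|e_{(i+1)}\|_A^2$ in terms of $\|e_{(i)}\|_A^2$:

$$
\begin{aligned}
\|e_{(i+1)}\|_A^2 &= \left(e_{(i)} + \alpha_{(i)} r_{(i)}\right)^T A \left(e_{(i)} + \alpha_{(i)} r_{(i)}\right) \\
&= e_{(i)}^T A e_{(i)} + 2\alpha_{(i)} r_{(i)}^T A e_{(i)} + \alpha_{(i)}^2 r_{(i)}^T A r_{(i)} \\
&= \|e_{(i)}\|_A^2 - 2\alpha_{(i)} r_{(i)}^T r_{(i)} + \alpha_{(i)}^2 r_{(i)}^T A r_{(i)} \\
&= \|e_{(i)}\|_A^2 - \frac{\left(r_{(i)}^T r_{(i)}\right)^2}{r_{(i)}^T A r_{(i)}} \\
&= \|e_{(i)}\|_A^2 \left(1 - \frac{\left(r_{(i)}^T r_{(i)}\right)^2}{\left(r_{(i)}^T A r_{(i)}\right)\left(e_{(i)}^T A e_{(i)}\right)}\right) \\
&= \|e_{(i)}\|_A^2 \left(1 - \frac{\left(\sum_j \xi_j^2 \lambda_j^2\right)^2}{\left(\sum_j \xi_j^2 \lambda_j^3\right)\left(\sum_j \xi_j^2 \lambda_j\right)}\right)
= \omega^2 \|e_{(i)}\|_A^2.
\end{aligned}
$$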
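The following NumPy check confirms that one steepest-descent step shrinks the energy norm by the factor $\omega^2 = 1 - \dfrac{(\sum_j \xi_j^2\lambda_j^2)^2}{(\sum_j \xi_j^2\lambda_j^3)(\sum_j \xi_j^2\lambda_j)}$. The random SPD matrix and error vector are assumptions made for illustration.

```python
import numpy as np

rng = np.random.default_rng(1)
M = rng.standard_normal((5, 5))
A = M @ M.T + np.eye(5)                   # random SPD matrix (illustrative)
lam, V = np.linalg.eigh(A)

e = rng.standard_normal(5)                # current error e_(i)
r = -A @ e                                # r_(i) = -A e_(i)
e_next = e + (r @ r) / (r @ A @ r) * r    # one steepest-descent step

xi = V.T @ e                              # eigen-components of e_(i)
omega_sq = 1 - np.sum(xi**2 * lam**2) ** 2 / (
    np.sum(xi**2 * lam**3) * np.sum(xi**2 * lam))

lhs = e_next @ A @ e_next                 # ||e_(i+1)||_A^2
rhs = omega_sq * (e @ A @ e)              # omega^2 ||e_(i)||_A^2
print(np.isclose(lhs, rhs), omega_sq)
```

By the Cauchy-Schwarz inequality, $\omega^2$ always lies in $[0, 1)$ for SPD $A$ and nonzero error, so $\|e_{(i)}\|_A$ never increases.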

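Here we only consider $n = 2$, with eigenvalues $\lambda_1 \ge \lambda_2$.

We define

- the spectral condition number of $A$ as $\kappa = \lambda_1 / \lambda_2 \ge 1$,
- the slope of $e_{(i)}$ (relative to the coordinate system defined by the eigenvectors) as $\mu = \xi_2 / \xi_1$.

Substituting $\lambda_1 = \kappa \lambda_2$ and $\xi_2 = \mu \xi_1$ gives

$$
\omega^2 = 1 - \frac{(\kappa^2 + \mu^2)^2}{(\kappa + \mu^2)(\kappa^3 + \mu^2)}.
$$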
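Here is a sketch that checks the two-dimensional formula against the general one. It assumes illustrative eigenvalues and components, and both helper names are hypothetical. The limits $\mu = 0$ (error along one eigenvector) and $\kappa = 1$ (equal eigenvalues) give $\omega = 0$, matching the special cases above.

```python
import numpy as np

def omega_sq_general(lam, xi):
    """Hypothetical helper: omega^2 from eigenvalues lam_j and components xi_j."""
    lam, xi = np.asarray(lam, float), np.asarray(xi, float)
    return 1 - np.sum(xi**2 * lam**2) ** 2 / (np.sum(xi**2 * lam**3) * np.sum(xi**2 * lam))

def omega_sq_2d(kappa, mu):
    """Hypothetical helper: omega^2 for n = 2 with kappa = lam1/lam2, mu = xi2/xi1."""
    return 1 - (kappa**2 + mu**2) ** 2 / ((kappa + mu**2) * (kappa**3 + mu**2))

lam1, lam2 = 7.0, 2.0                     # illustrative eigenvalues, lam1 >= lam2
xi1, xi2 = 1.5, -0.4                      # illustrative error components
kappa, mu = lam1 / lam2, xi2 / xi1
print(omega_sq_general([lam1, lam2], [xi1, xi2]), omega_sq_2d(kappa, mu))
```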

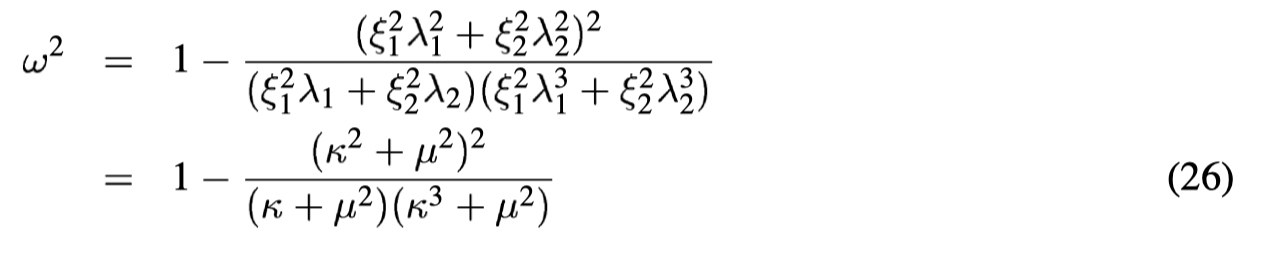

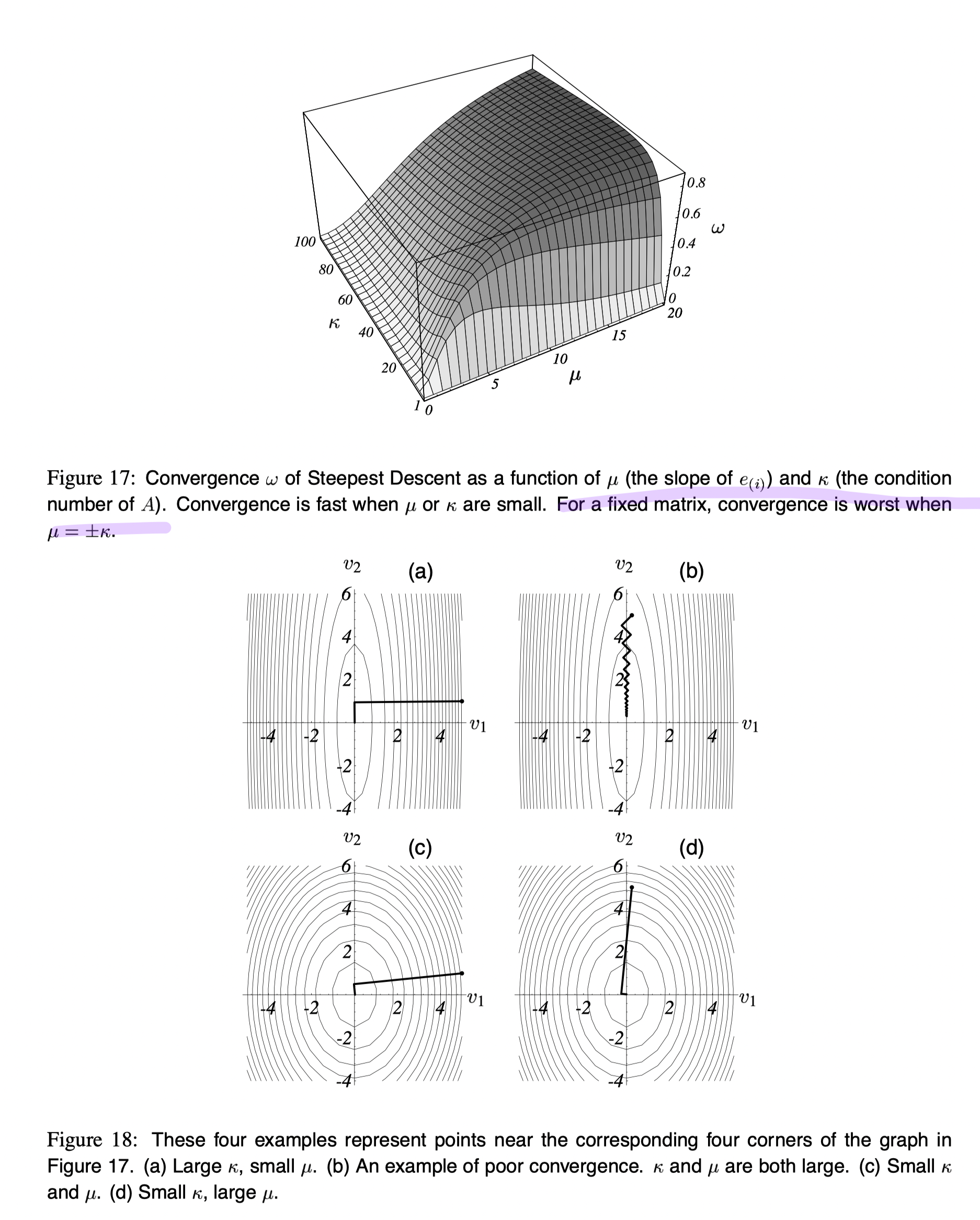

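Plotting $\omega$ with respect to $\kappa$ and $\mu$ (figures above).

The worst case is when $\mu = \pm\kappa$, which gives

$$
\omega^2 \le 1 - \frac{4\kappa^4}{\kappa^5 + 2\kappa^4 + \kappa^3} = \frac{(\kappa - 1)^2}{(\kappa + 1)^2},
\qquad
\omega \le \frac{\kappa - 1}{\kappa + 1}.
$$

That is, the larger the condition number, the closer $\omega$ can get to 1 and the more slowly steepest descent can converge.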
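The worst case can be checked numerically. The sketch below scans a dense grid of slopes $\mu$ for a few condition numbers, all assumed for illustration, and redefines a hypothetical `omega_sq_2d` so that it stands alone. The largest $\omega$ should match $(\kappa - 1)/(\kappa + 1)$ and occur at $|\mu| = \kappa$.

```python
import numpy as np

def omega_sq_2d(kappa, mu):
    """Hypothetical helper: omega^2 for n = 2 with kappa = lam1/lam2, mu = xi2/xi1."""
    return 1 - (kappa**2 + mu**2) ** 2 / ((kappa + mu**2) * (kappa**3 + mu**2))

results = {}
for kappa in [1.5, 10.0, 100.0]:
    mus = np.linspace(-5 * kappa, 5 * kappa, 200_001)   # dense grid of slopes
    w2 = omega_sq_2d(kappa, mus)
    worst = np.sqrt(w2.max())                            # largest omega on the grid
    bound = (kappa - 1) / (kappa + 1)                    # predicted worst case
    results[kappa] = (worst, bound, abs(mus[np.argmax(w2)]))
    print(kappa, worst, bound)
```

As $\kappa$ grows, the bound approaches 1. For $\kappa = 100$ it is about $0.98$, so a bad starting slope can reduce $\|e\|_A$ by only about 2% per step.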