Tiled Matrix Multiplication -- CUDA implementation

- https://penny-xu.github.io/blog/tiled-matrix-multiplication

- https://marek.ai/matrix-multiplication-on-cpu.html

Aim

Use tiled MM can reduce global memory access in GPUs.

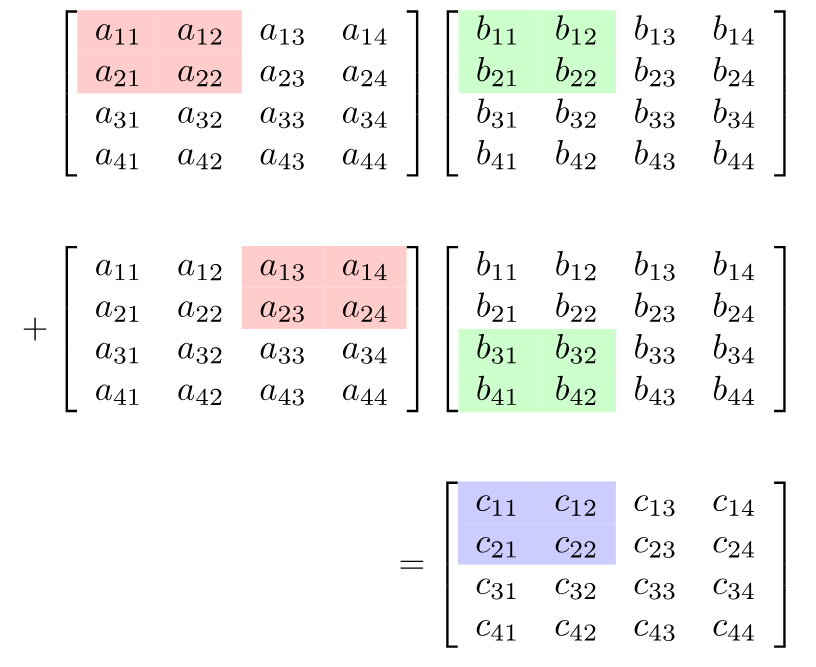

Mathematical operation of tiling MM

- Link: https://leimao.github.io/blog/CUDA-Matrix-Multiplication/

Assuming the tiling block size is, then

Algorithm

for (int m = 0; m<M/T; m+=T) { /* Step through the blocks along A rows */

for (int n=0; n<N/T; n+=T) {/* Step through the blocks along B columns */

for(int k=0; k<K/T; k+=T) { /*Step through A'columns and B's rows to complete the computations for a block of C */

/*inside the block, do the Matrix Multiplication*/

for(int mt=m; mt< m+T && mt < M; mt++){

for(int nt=n; nt<n+T && nt < N; nt++){

for(int kt = k; kt<k+T && kt<K; kt++){

C[mt*M+nt] += A[mt * M + kt] * B[kt*K+nt]; /* += complete each block */

}

}

}

}

}

}

On GPUs

notes/CUDA - grids, blocks and threads

Each thread is responsible for one element as well

Access each element of matrices in the thread

int row = by*blockDim.y + ty;

int col = bx*blockDim.x + tx;

/*

A[row][tx]

B[ty][col]

/*